Xen Gpl Pv Driver Developers SCSI & RAID Devices Driver

The PV drivers are split into five packages. XenBus: this is the key package that supports all other PV drivers. It provides the XENBUS driver, which binds either to the XenServer variant of the … (see … in the hypervisor source repository) or to the ubiquitous Xen Platform PCI Device, both of which QEMU provides to HVM guests.

A Linux driver is also available for entry-level 12 Gb/s Intel RAID controllers supporting RAID 0, 1, 10 and 1E (Red Hat Linux, SUSE Linux, Ubuntu; version ph9.2-28.00.04.00), covering the Intel® RAID Module RMS3VC160 and the Intel® RAID Controllers RS3UC080J and RS3GC008.

Hypervisor limits:

- Microsoft Hyper-V Server 2012: 320 cores / 64 CPUs; 4 TB; no limit; 1024; 64; 1 TB; 4 IDE, 256 SCSI; 64 TB

- Microsoft Hyper-V Server 2016: 512 cores / 320 CPUs; 24 TB; no limit; 1024; 240; 12 TB; 4 IDE, 256 SCSI; 64 TB

- Xen: 4095 CPUs; 16 TB; no limit; no limit; 512 PV / 128 HVM; 512 GB PV / 1 TB HVM

- XCP-ng: 4095 CPUs; 16 TB; no limit; no limit

I have an extra SCSI device, sdb (0:0:1:0), to test SCSI hot plug. I tried the following steps:

1. Started the Windows 2003 guest OS with the /gplpv boot option.

2. Attached the SCSI device with the following command:
   xm scsi-attach Windows2003 0:0:1:0 0:0:1:0

I can see a Xen SCSI Driver under SCSI and RAID controllers.

Paravirtualized SCSI (PVSCSI) was added in Xen 3.3.0. PVSCSI allows high-performance passthrough of SCSI devices (or LUNs) from dom0 to a Xen PV or HVM guest. It can be used to pass through a tape drive, a tape autoloader, or basically any SCSI/FC device. With PVSCSI the guest has direct access to the SCSI device, which some management tools require. PVSCSI can also pass through multiple SCSI devices or a whole SCSI HBA.

Note that disks (any block device in dom0) can be passed to the Xen guest using the normal Xen blkback functionality; PVSCSI is not needed for that.

PVSCSI requirements

- PVSCSI requires a scsiback backend driver in the dom0 kernel.

- PVSCSI requires a scsifront frontend driver in the guest kernel.

- Xen 3.3.0 or newer.
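As a quick sanity check for the first two requirements, the following hypothetical Python helper reports whether PVSCSI driver modules are visible under /sys/module on a Linux system. It assumes the upstream module names xen_scsiback and xen_scsifront (from the xen-scsiback and xen-scsifront drivers in Linux 3.18 and later). Xenlinux kernels may use different names, and a driver built into the kernel may not appear there, so a "not found" result is not conclusive.

```python
"""Hypothetical check for PVSCSI kernel modules under /sys/module.

Assumption: upstream module names xen_scsiback (dom0) and xen_scsifront (guest).
Xenlinux kernels may name them differently; built-in drivers may not be listed.
"""
from pathlib import Path
from typing import Dict

# Assumed module names ('-' in the driver name becomes '_' in /sys/module).
PVSCSI_MODULES = {
    "backend (dom0)": "xen_scsiback",
    "frontend (guest)": "xen_scsifront",
}


def pvscsi_modules_present(sys_module: Path = Path("/sys/module")) -> Dict[str, bool]:
    """Map each PVSCSI role to whether its module directory exists."""
    return {role: (sys_module / name).is_dir() for role, name in PVSCSI_MODULES.items()}


if __name__ == "__main__":
    for role, present in pvscsi_modules_present().items():
        print(f"{role}: {'found' if present else 'not found'}")
```

Run it in dom0 to look for the backend and inside a Linux guest to look for the frontend.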

PVSCSI driver availability

PVSCSI drivers can be found in at least the following Xen kernel trees:

- Upstream Linux kernel version 3.18 (and later versions) includes Xen PVSCSI drivers, both xen-scsiback and xen-scsifront, out-of-the-box!

- linux-2.6.18-xen from XenProject.org contains both scsiback and scsifront.

- The Xen PV-on-HVM drivers ('unmodified_drivers' in the Xen source tree) include a PVSCSI scsifront driver for Linux 2.6.18, 2.6.27 and 2.6.32.

- SUSE SLES11 SP1 2.6.32 Xenlinux kernel (and later versions) has PVSCSI drivers.

- SUSE SLES12 Xenlinux kernel has PVSCSI drivers.

- Windows Xen GPLPV drivers have scsifront driver.

Xen toolstack support for PVSCSI features

- The xm/xend toolstack supports PVSCSI from Xen 3.3 up to Xen 4.4.

- The xl/libxl toolstack does not yet support PVSCSI (as of Xen 4.7).

Configuration

libxl/xend

The syntax in domU.cfg is vscsi = [ 'pdev,vdev[,options]', ... ], where:

- 'pdev' describes the physical device, preferably in a persistent format such as /dev/disk/by-*/*.

- 'vdev' is the domU device in vHOST:CHANNEL:TARGET:LUN notation, all integers.

- 'options' lists additional flags that a backend may recognize.
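The entry format can be illustrated with a small validator, written in Python because xm configuration files are themselves Python syntax. This is a hypothetical helper, not part of any Xen toolstack: it assumes pdev contains no commas and only checks that vdev consists of four non-negative integers. The by-id path in the example is a made-up placeholder.

```python
"""Hypothetical validator for vscsi entries of the form 'pdev,vdev[,options]'."""
import re
from dataclasses import dataclass, field
from typing import List, Tuple

# vdev must be HOST:CHANNEL:TARGET:LUN, all non-negative integers.
VDEV_RE = re.compile(r"^(\d+):(\d+):(\d+):(\d+)$")


@dataclass
class VscsiEntry:
    pdev: str                         # e.g. a /dev/disk/by-id/... path or an H:C:T:L string
    vdev: Tuple[int, int, int, int]   # (host, channel, target, lun) as seen by the domU
    options: List[str] = field(default_factory=list)


def parse_vscsi(entry: str) -> VscsiEntry:
    """Split one vscsi entry and validate its vdev part."""
    parts = [p.strip() for p in entry.split(",")]
    if len(parts) < 2 or not parts[0]:
        raise ValueError(f"expected 'pdev,vdev[,options]', got {entry!r}")
    pdev, vdev, *options = parts
    match = VDEV_RE.match(vdev)
    if match is None:
        raise ValueError(f"vdev must be HOST:CHANNEL:TARGET:LUN integers, got {vdev!r}")
    host, chan, tgt, lun = (int(g) for g in match.groups())
    return VscsiEntry(pdev, (host, chan, tgt, lun), [o for o in options if o])


# Example domU.cfg-style list (placeholder device path).
vscsi = ["/dev/disk/by-id/scsi-EXAMPLE,0:0:1:0"]
parsed = [parse_vscsi(e) for e in vscsi]
```

Rejecting a malformed vdev early gives a clearer error than a failed attach at domain start.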

xl/xm

xl scsi-attach domU 'pdev' 'vdev' ['options']

xl scsi-detach domU 'vdev'
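For scripted hot plug, the two commands can be assembled programmatically. The helper names below (scsi_attach_cmd, scsi_detach_cmd, run) are hypothetical; they only reproduce the argument order shown above, do not check whether the installed toolstack supports these subcommands, and default to a dry run that prints the command instead of executing it.

```python
"""Hypothetical wrappers that build (and optionally run) scsi-attach/detach commands."""
import shlex
import subprocess
from typing import List, Optional


def scsi_attach_cmd(domain: str, pdev: str, vdev: str,
                    options: Optional[str] = None, tool: str = "xl") -> List[str]:
    """Build: <tool> scsi-attach <domain> <pdev> <vdev> [options]."""
    cmd = [tool, "scsi-attach", domain, pdev, vdev]
    if options:
        cmd.append(options)
    return cmd


def scsi_detach_cmd(domain: str, vdev: str, tool: str = "xl") -> List[str]:
    """Build: <tool> scsi-detach <domain> <vdev>."""
    return [tool, "scsi-detach", domain, vdev]


def run(cmd: List[str], dry_run: bool = True) -> None:
    """Print the command in dry-run mode; otherwise execute it and raise on failure."""
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)
```

For example, run(scsi_attach_cmd("Windows2003", "0:0:1:0", "0:0:1:0", tool="xm")) prints xm scsi-attach Windows2003 0:0:1:0 0:0:1:0, the command used in the Windows 2003 hot-plug test.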

libvirt

libvirt offers only a very limited way to describe the host device: just the 'H:C:T:L' notation is supported for pdev.

More information and links

- Xen Summit 2007 PVSCSI presentation slides: http://www-archive.xenproject.org/files/xensummit_fall07/19_Matsumoto.pdf

- Xen Summit Tokyo 2008 PVSCSI update presentation slides: http://www-archive.xenproject.org/files/xensummit_tokyo/24_Hitoshi%20Matsumoto_en.pdf

- PVSCSI usage with NPIV: http://lists.xensource.com/archives/html/xen-devel/2007-08/msg00700.html

- First version of PVSCSI (with usage examples): http://lists.xensource.com/archives/html/xen-devel/2007-07/msg00450.html

- Second version of PVSCSI: http://lists.xensource.com/archives/html/xen-devel/2007-10/msg00526.html

- Third version of PVSCSI: http://lists.xensource.com/archives/html/xen-devel/2007-10/msg00988.html

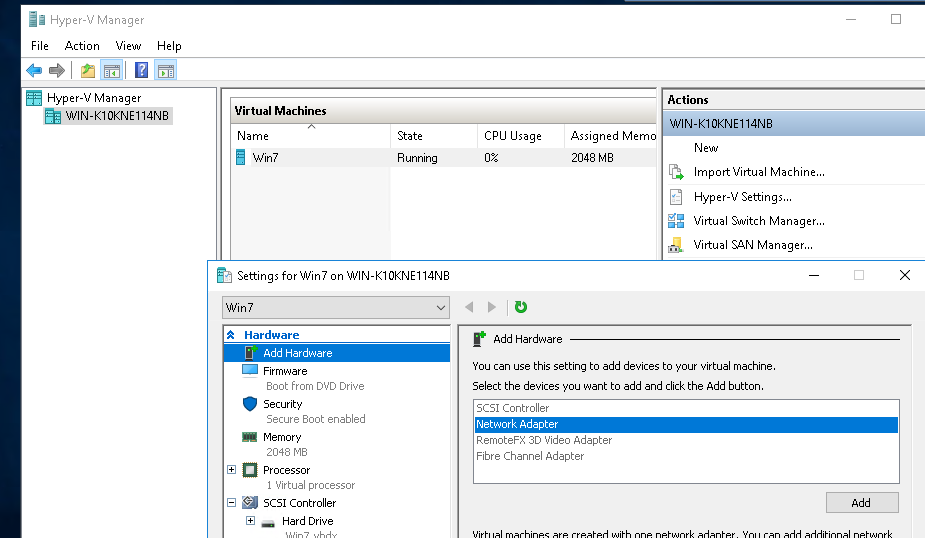

How to install Windows Server 2008 R2 on a Xen host with GPL PV drivers v0.10.0.130


[Update: signed drivers are now available; see 'Installing signed GPLPV drivers' in the Links section.]

The machine is called 'slate' and has a single 32 GB disk. The Xen host runs (a very old) Fedora 8 with xen-3.1.2-5.fc8.

Install Server 2008 R2

Assign a 32 GB chunk of disk (from an LVM volume) to the operating system and boot from the installation DVD. Use a VNC client to step through the graphical installation, with the following 'xm' style configuration:
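A hypothetical xm-style HVM configuration for the installation phase might look like the sketch below; xm parses its configuration files as Python, so this is valid Python. Every path, the LVM volume name, the memory size and the bridge name are assumptions to adapt to your host, and the hvmloader and qemu-dm locations in particular vary between distributions and architectures.

```python
# Hypothetical xm-style HVM configuration for installing Server 2008 R2.
# All paths, the LV name, memory size and bridge name are assumptions.
name = "slate"
builder = "hvm"
kernel = "/usr/lib/xen/boot/hvmloader"       # HVM firmware loader (location varies by distro)
device_model = "/usr/lib/xen/bin/qemu-dm"    # qemu-dm location also varies (e.g. lib64 on x86_64)
memory = 2048                                # MB of guest RAM
vcpus = 2
disk = [
    "phy:/dev/VolGroup00/slate,hda,w",                        # 32 GB LVM volume as the system disk
    "file:/var/lib/xen/images/win2k8r2_dvd.iso,hdc:cdrom,r",  # installation DVD image
]
vif = ["type=ioemu, bridge=xenbr0"]          # emulated NIC while no PV drivers are installed
boot = "d"                                   # boot from the CD-ROM first
vnc = 1                                      # graphical console over VNC
vnclisten = "0.0.0.0"                        # listen on all host interfaces, not only loopback
sdl = 0
```

Listening on the host's network interfaces rather than loopback matches the note below about the SSH tunnel not working.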

Note: A Windows Vista x64 machine was used as the VNC client during installation. For some reason, connecting to the VNC session over a PuTTY SSH tunnel using the loopback interface didn't work (the connection hung during the initial handshake). Binding to the Xen host's Ethernet interface and connecting directly worked well.

GPL PV Drivers

Given the Windows machine is Server 2008 R2 x64, the drivers must have 'wlh' and 'amd64' in their name. The current revision is gplpv_fre_wlh_AMD64_0.10.0.130.

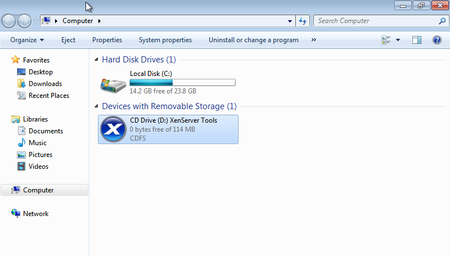

Installing the drivers was very straightforward. Because the drivers are signed for 'test mode' operation and a certificate is provided, getting them installed is reasonably painless. I created an ISO image containing the MSI installer and attached it as a CD-ROM device. This means the emulated qemu network adapter doesn't have to be used or configured (although it would be another way to get the drivers onto the machine).

The 'xm' style VM configuration with the GPL PV driver ISO as a second CD-ROM device is:
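A sketch of just the disk entry for this stage, assuming a hypothetical layout with the LVM system disk on hda, the installation DVD image on hdc, and an ISO containing the GPLPV MSI attached as a second CD-ROM on hdd. The volume path and ISO file names are placeholders.

```python
# Hypothetical xm 'disk' list with the GPLPV driver ISO as a second CD-ROM.
# Paths and file names are placeholders, not the author's actual values.
disk = [
    "phy:/dev/VolGroup00/slate,hda,w",                                          # system disk
    "file:/var/lib/xen/images/win2k8r2_dvd.iso,hdc:cdrom,r",                    # install DVD
    "file:/var/lib/xen/images/gplpv_fre_wlh_AMD64_0.10.0.130.iso,hdd:cdrom,r",  # ISO holding the MSI
]
```

An HVM guest has four emulated IDE slots (hda to hdd), so the driver ISO fits alongside the disk and the installation DVD without removing either.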

The four steps I followed are:

- set 'test signing' mode on

- reboot

- install driver MSI

- reboot

To enable test signing mode, run the following from an elevated (Administrator) command prompt: bcdedit /set testsigning on

Once the driver was installed and the machine was rebooted, I configured a static IPv4 and IPv6 address on the network adapter, and enabled remote desktop.


Device Manager confirmed that the drivers were in use: it listed the 'Xen Net Device Driver' and the 'Xen PV Disk SCSI Disk Device'.

Note: The shutdown service works well 'out of the box'. Shutting the machine down from the xen host with 'xm shutdown slate' performs an orderly shutdown.

Running 'xm' configuration

Once the machine is up and running:

- change the boot device back to 'c' drive

- change the network interface to 'type=paravirtualised' (so that qemu-dm doesn't create a tap device)
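The two changes above correspond to these xm settings, shown as a hypothetical fragment: boot = "c" boots from the first hard disk, and the vif entry uses the 'type=paravirtualised' value the author describes so that no emulated adapter is created. The bridge name xenbr0 is an assumption.

```python
# Hypothetical xm settings for normal running once the GPL PV drivers work.
# 'type=paravirtualised' is the value described in the text; xenbr0 is assumed.
boot = "c"                                      # boot from the first hard disk again
vif = ["type=paravirtualised, bridge=xenbr0"]   # PV network only, no qemu-dm tap device
```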

Residual Issues

Because domain 0 runs an old version of Xen (3.1.2), using the GPL PV drivers causes the CD-ROM devices to stop working, and the 'Intel(r) 82371SB PCI Bus Master IDE Controller' device reports a problem.

Upgrading to the latest Xen would solve this issue.

Links

- GPL PV v0.10.0.97 announcement

- Installing Xen Windows Gpl Pv (outdated)

- Driver Signature Enforcement Override

- Installing signed GPLPV drivers

Appendices

Windows Server 2008 R2 Installation gallery

Ready to install. Pick the time and currency format.

Select which version of the operating system to install.

Accept the license terms (like there is a real choice).


Pick custom install (this is a fresh install, not an upgrade)

The install program creates a 100 MB partition. There was no choice.

Installation begins. Note that the machine does reboot during the install.

And reboots...

Set the Administrator password

Enter a new password

Overall the installation was reasonably easy and quick.

GPL PV Driver Installation Gallery

Once 'bcdedit /set testsigning on' has been run and the machine has rebooted, 'Test Mode' should be displayed in the lower right corner of the desktop.

Run the GPL PV driver installation msi.

Accept the license.

The custom install shows what will be installed. Note how the 'test mode' signing certificate is installed.

Install.

Install the drivers, and always trust the 'GPLPV_Test_Cert'.

The installation is done.

Reboot the system to activate the drivers.


After rebooting, Device Manager shows the following devices. (Note: the host runs Xen 3.1.2; newer Xen hosts may hide more devices.)